
import numpy as np

import pandas as pd

import tensorflow as tf

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (8, 8)

kakamana

April 6, 2023

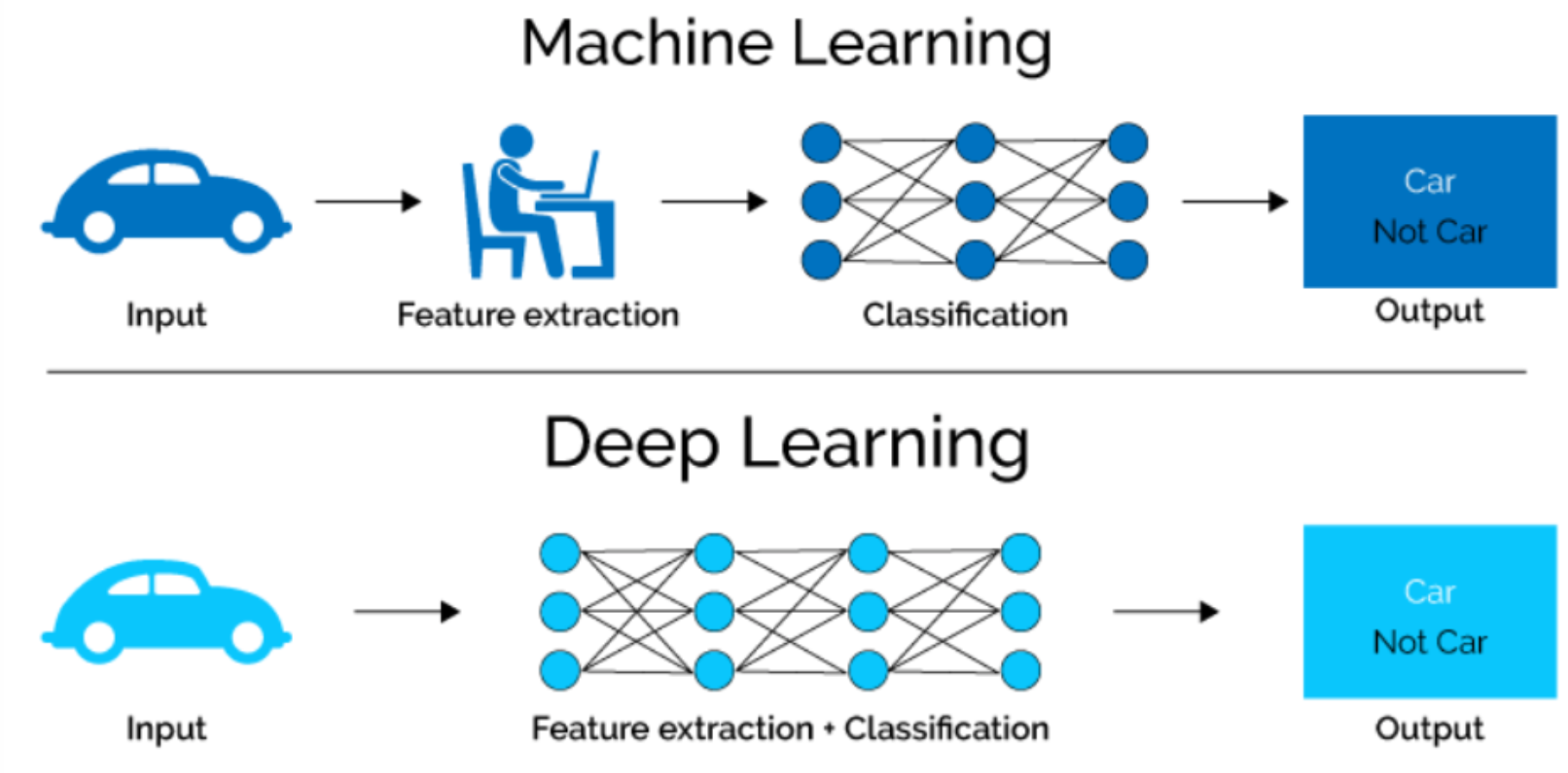

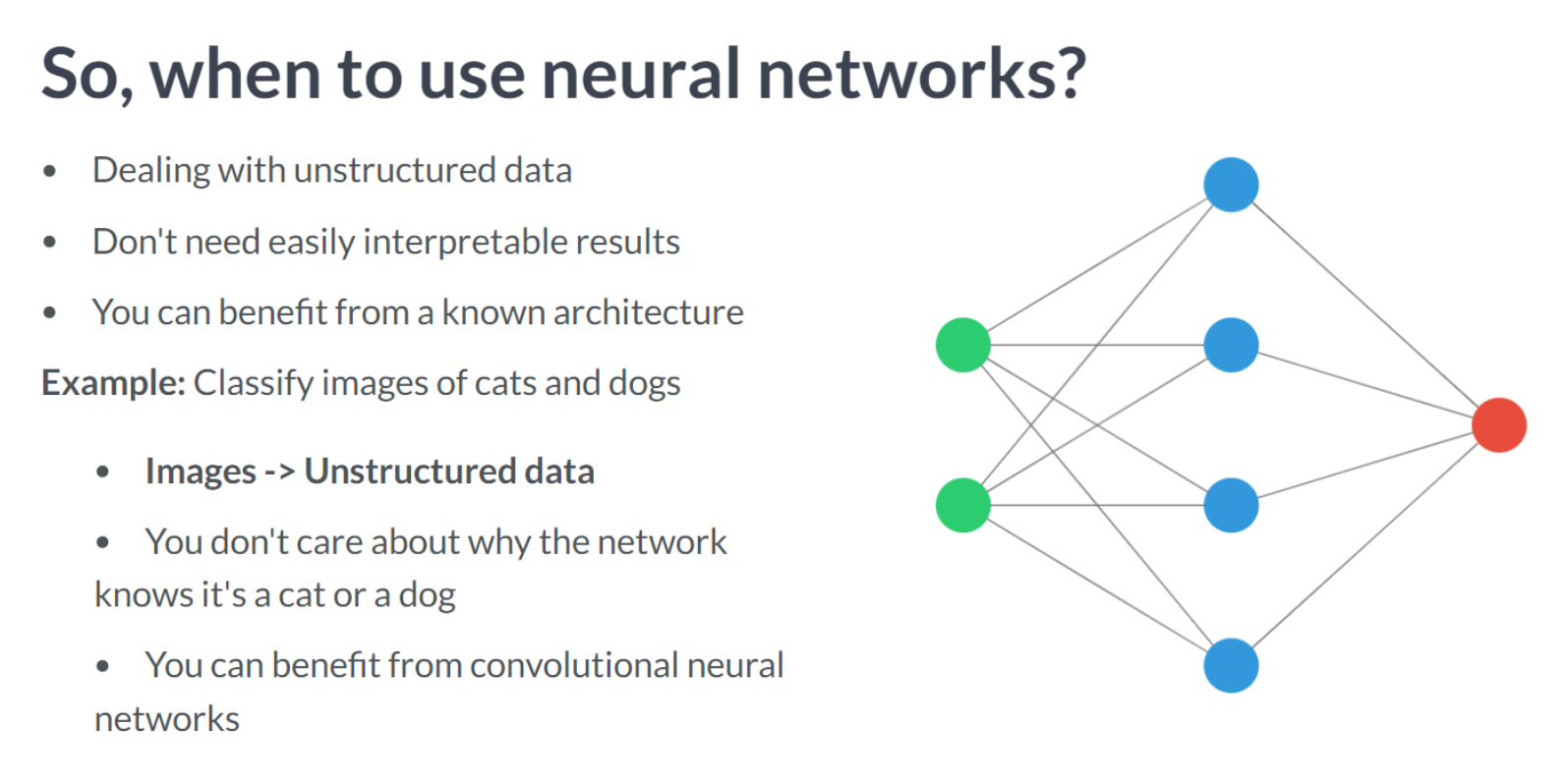

The purpose of this first chapter is to introduce you to neural networks, to understand what kinds of problems they can solve, and when they should be used. Moreover, you will build several networks and save the earth by training a regression model that approximates the orbit of a meteor approaching the earth.

This article, Introducing Keras, is part of the DataCamp course Introduction to Deep Learning with Keras. There is no denying that deep learning is here to stay! A powerful innovation tool, it is used to solve complex problems arising from unstructured data. Keras is among the frameworks that make it easier to develop deep learning models, and it is versatile enough to build industry-ready models quickly. In this course, you will learn regression and save the Earth by predicting an asteroid's trajectory, apply binary classification to distinguish real from fake dollar bills, apply multiclass classification to decide who threw which dart at a dart board, and use neural networks to reconstruct noisy images. Additionally, you will learn how to tune your models to enhance their performance during training.

This is my learning experience of data science through DataCamp. These repository contributions are part of my learning journey through my graduate program, the Master of Applied Data Science (MADS) at the University of Michigan, as well as DeepLearning.AI, Coursera & DataCamp. You can find similar articles & more stories on my Medium & LinkedIn profiles. I am also available on Kaggle, my GitHub blog & GitHub repos. Thank you for your motivation, support & valuable feedback.

These include projects, coursework & notebooks that I worked through on my data science journey. They are created for reproducibility & future reference purposes only. All source code, slides and screenshots are the intellectual property of their respective content authors. If you find this content beneficial, kindly consider a learning subscription from DeepLearning.AI, Coursera or DataCamp.

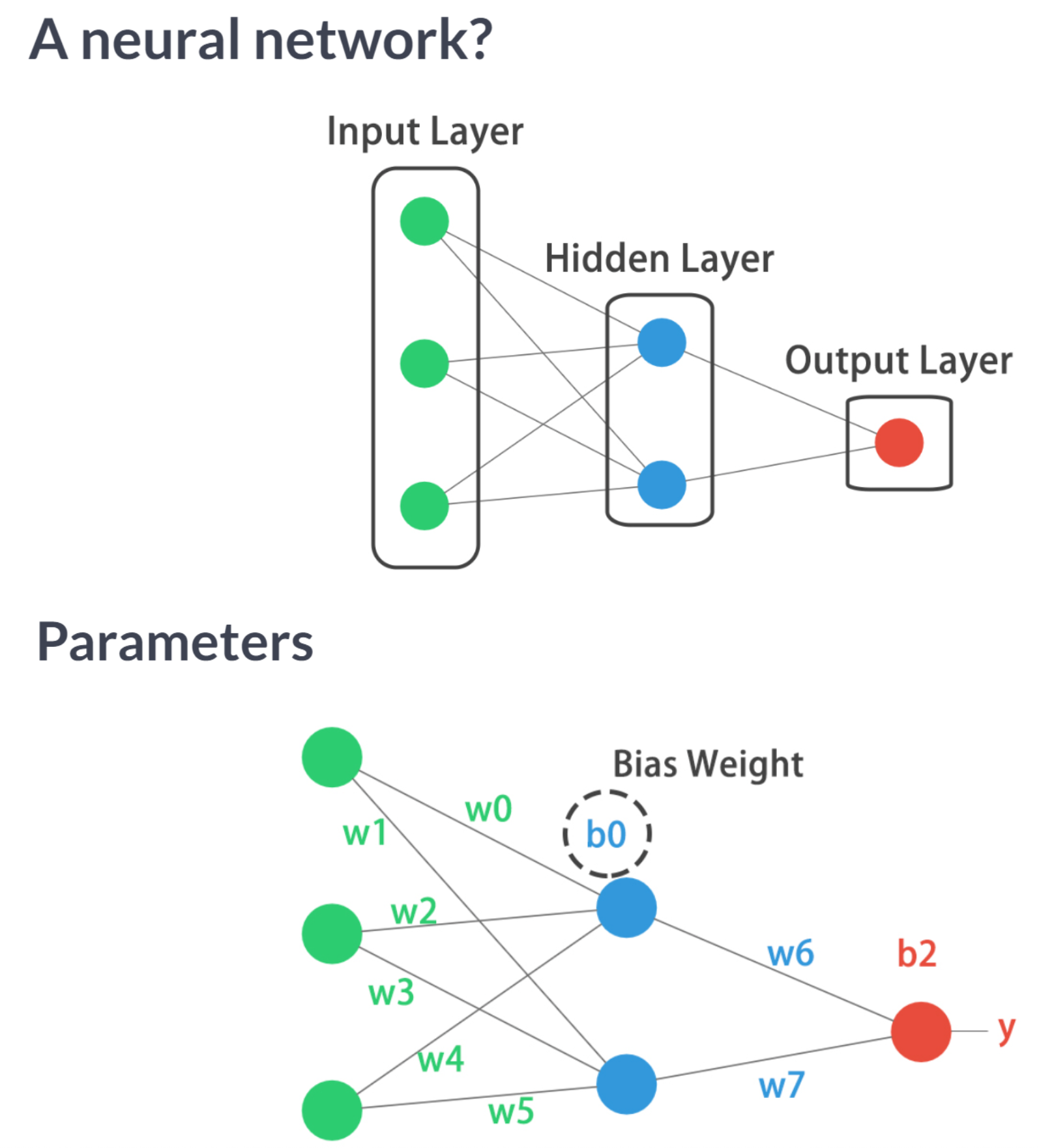

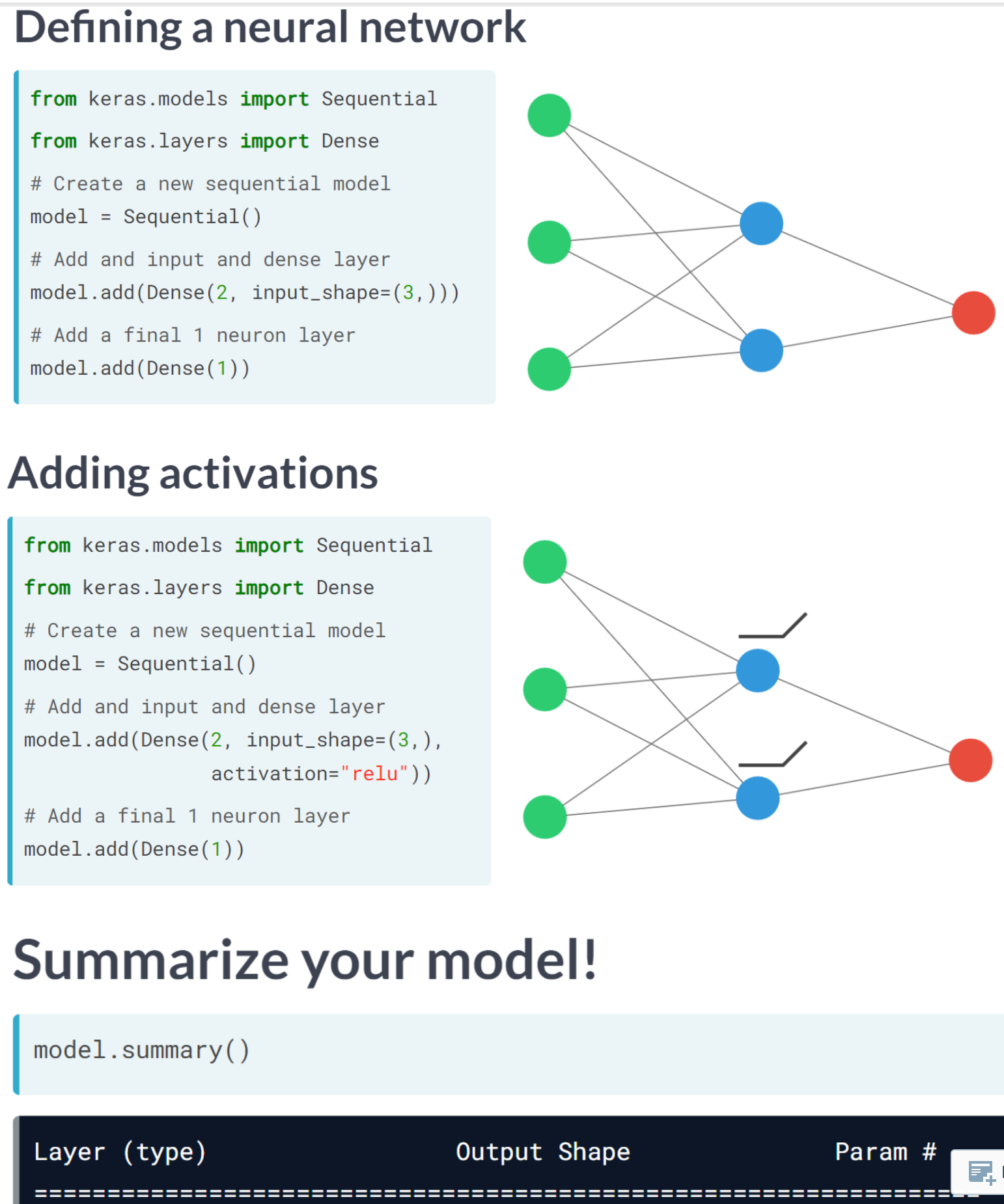

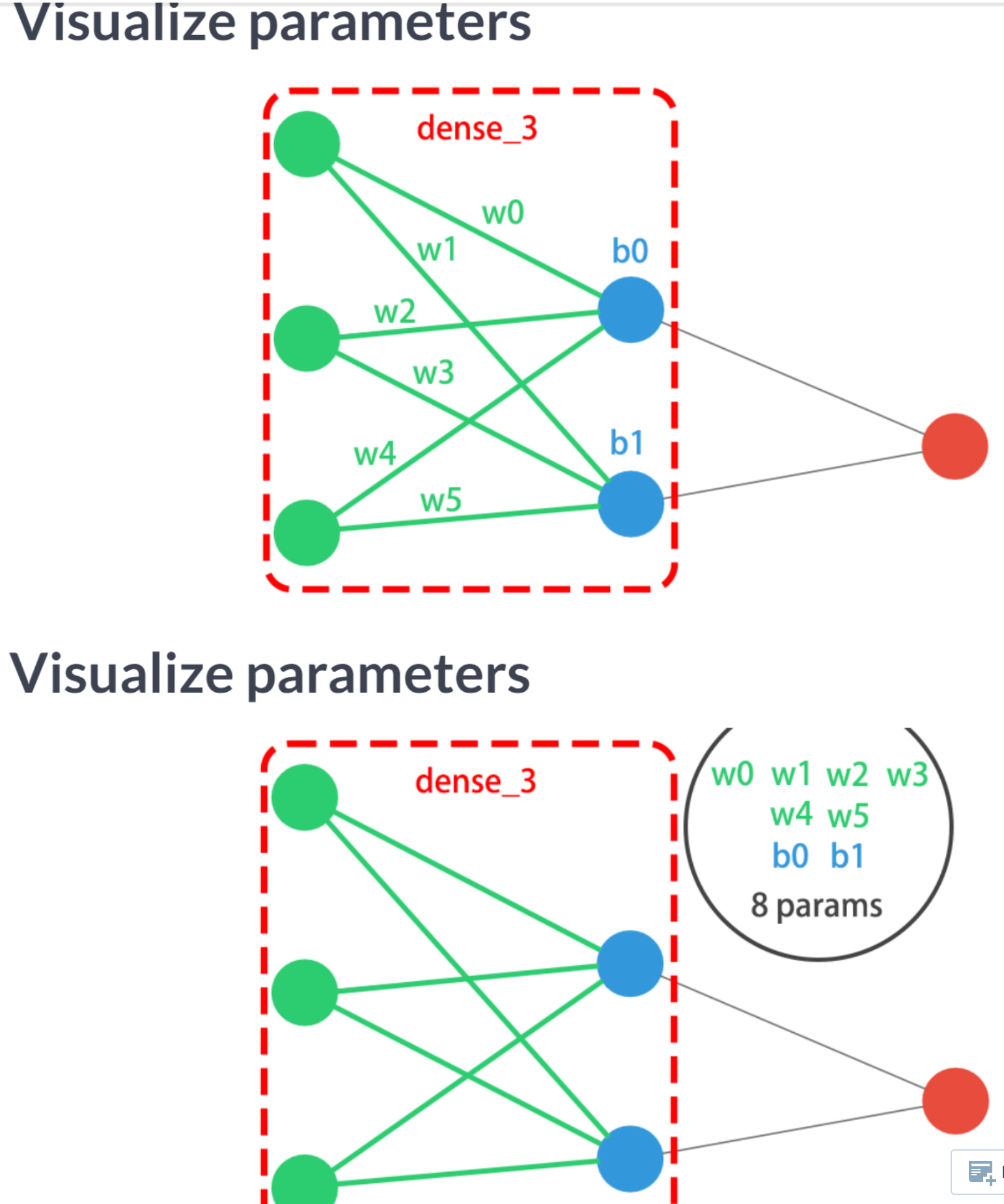

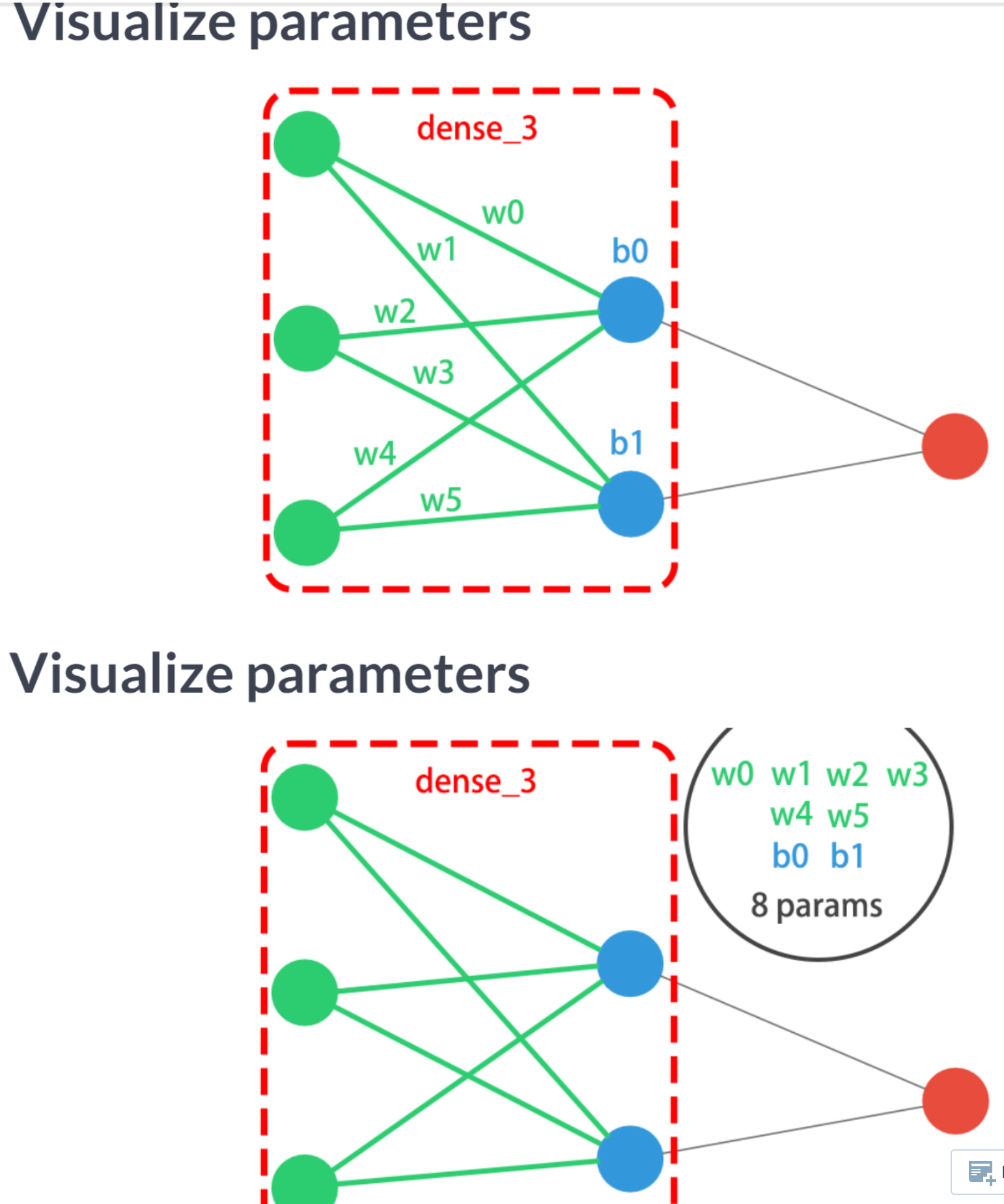

You’re going to build a simple neural network to get a feel for how quickly this can be done in Keras.

You will build a network that takes two numbers as an input, passes them through a hidden layer of 10 neurons, and finally outputs a single non-constrained number.

A non-constrained output can be obtained by not setting an activation function in the output layer. This is useful for problems like regression, where we want the output to be able to take any value.

# Import the Sequential model and Dense layer

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

# Create a Sequential model

model = Sequential()

# Add an input layer and a hidden layer with 10 neurons

model.add(Dense(10, input_shape=(2,), activation="relu"))

# Add a 1-neuron output layer

model.add(Dense(1))

# Summarise your model

model.summary()

Model: "sequential_4"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_8 (Dense) (None, 10) 30

dense_9 (Dense) (None, 1) 11

=================================================================

Total params: 41

Trainable params: 41

Non-trainable params: 0

_________________________________________________________________

You’ve just created a neural network. Create a new one now and take some time to think about the weights of each layer. The Keras Dense layer and the Sequential model are already loaded for you to use.
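To make the weight counts concrete: a fully connected (Dense) layer with n inputs and m neurons holds n × m weights plus m biases. The short sketch below reproduces the 30 and 11 parameters reported in the summary above; the helper name `dense_params` is made up for illustration and is not part of Keras.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_inputs * n_units + n_units


hidden = dense_params(2, 10)   # 2 inputs feeding 10 hidden neurons
output = dense_params(10, 1)   # 10 hidden activations feeding 1 output neuron
print(hidden, output, hidden + output)  # 30 11 41
```

The same arithmetic works in reverse: a 5-neuron Dense layer with 20 parameters must receive 3 inputs, since (3 + 1) × 5 = 20.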

This is the network you are creating:

Model: "sequential_5"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_10 (Dense) (None, 5) 20

dense_11 (Dense) (None, 1) 6

=================================================================

Total params: 26

Trainable params: 26

Non-trainable params: 0

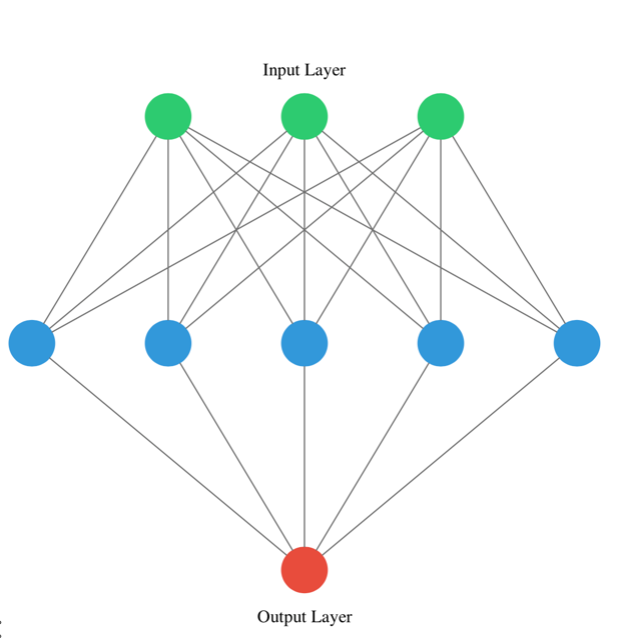

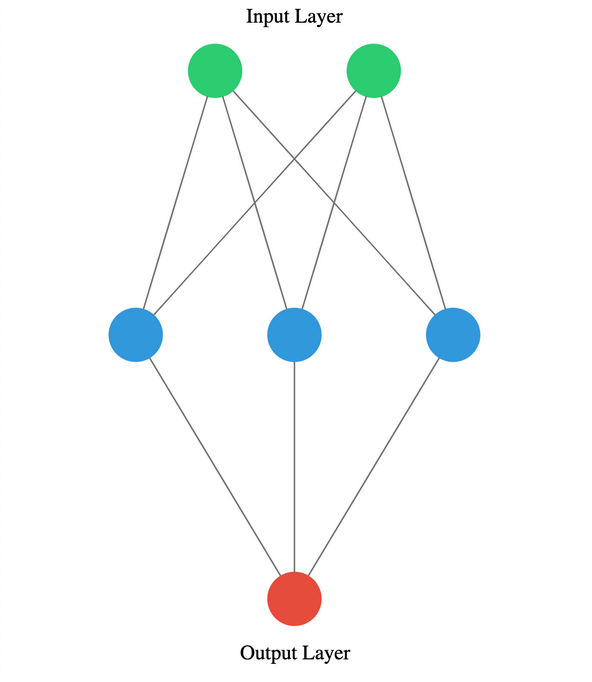

_________________________________________________________________You will take on a final challenge before moving on to the next lesson. Build the network shown in the picture below.

Model: "sequential_6"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_12 (Dense) (None, 3) 9

dense_13 (Dense) (None, 1) 4

=================================================================

Total params: 13

Trainable params: 13

Non-trainable params: 0

_________________________________________________________________
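The code behind the last two summaries is not shown, so here is a minimal sketch of one way to obtain the same parameter counts. It assumes 3 inputs for the 5-neuron network and 2 inputs for the 3-neuron network (inferred from the parameter counts, since (3 + 1) × 5 = 20 and (2 + 1) × 3 = 9) and a ReLU hidden activation, which is a guess that does not affect the counts. `build_network` is a hypothetical helper, not something from the course.

```python
from tensorflow.keras import Input
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Sequential


def build_network(n_inputs, n_hidden):
    """One hidden Dense layer followed by a single unconstrained output."""
    model = Sequential()
    model.add(Input(shape=(n_inputs,)))
    # Assumption: relu in the hidden layer; the summaries do not show it.
    model.add(Dense(n_hidden, activation="relu"))
    # No activation, so the output can take any value.
    model.add(Dense(1))
    return model


five_unit_model = build_network(n_inputs=3, n_hidden=5)
three_unit_model = build_network(n_inputs=2, n_hidden=3)

print(five_unit_model.count_params())   # 26
print(three_unit_model.count_params())  # 13
```

Calling `.summary()` on either model should list the same per-layer counts as the tables above, although the layer and model names will differ from run to run.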

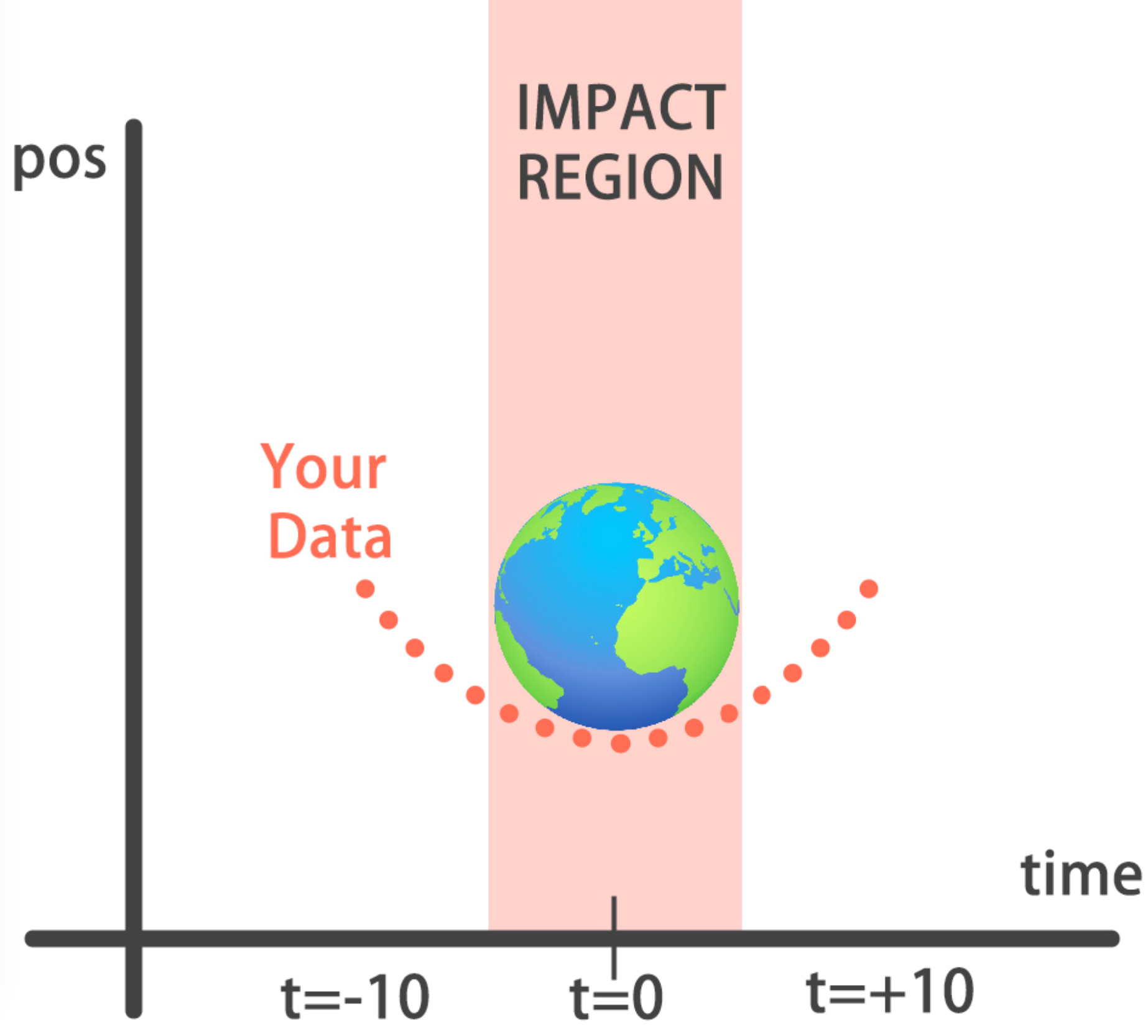

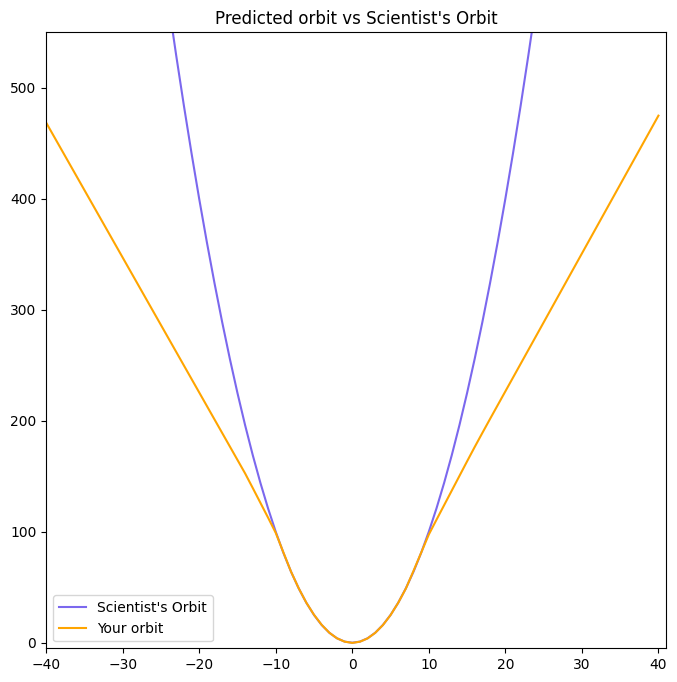

You will build a simple regression model to predict the orbit of the meteor!

Your training data consist of measurements taken at time steps from 10 minutes before the impact region to 10 minutes after, that is, from -10 to +10 minutes. Each time step can be viewed as an X coordinate in our graph, with an associated position Y for the meteor orbit at that time step.

Note that you can view this problem as approximating a quadratic function via the use of neural networks.

This data is stored in two numpy arrays: time_steps, which holds the features, and y_positions, which holds the labels. Go on and build your model! It should be able to predict the y positions for the meteor orbit at future time steps.

| | time_steps | y |
|---|---|---|
| 0 | -10.000000 | 100.000000 |
| 1 | -9.989995 | 99.800000 |
| 2 | -9.979990 | 99.600200 |
| 3 | -9.969985 | 99.400601 |
| 4 | -9.959980 | 99.201201 |
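The arrays themselves are not included here. If you want to follow along, the sketch below builds stand-ins under an assumption: 2000 evenly spaced time steps between -10 and 10 with y equal to the square of the time step. That choice reproduces the five rows shown above and is consistent with the 63 batches per epoch in the training log further down, but it is an inference, not the course's actual data file.

```python
import math

import numpy as np

# Assumption: 2000 evenly spaced samples, inferred from the table rows above.
time_steps = np.linspace(-10, 10, 2000)
# The orbit is treated as a quadratic function of time.
y_positions = time_steps ** 2

print(np.round(time_steps[:5], 6))
print(np.round(y_positions[:5], 6))

# With the Keras default batch_size of 32, this many batches make up one epoch.
print(math.ceil(len(time_steps) / 32))  # 63
```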

model = Sequential()

# Add a Dense layer with 50 neurons and an input of 1 neuron

model.add(Dense(50, input_shape=(1, ), activation='relu'))

# Add two Dense layers with 50 neurons and relu activation

model.add(Dense(50, activation='relu'))

model.add(Dense(50, activation='relu'))

# End your model with a Dense layer and no activation

model.add(Dense(1))

print("\nYou are closer to forecasting the meteor orbit! It's important to note we aren't using an activation function in our output layer since y_positions aren't bounded and they can take any value. Your model is built to perform a regression task")

You are closer to forecasting the meteor orbit! It's important to note we aren't using an activation function in our output layer since y_positions aren't bounded and they can take any value. Your model is built to perform a regression task

You’re going to train your first model in this course, and for a good cause!

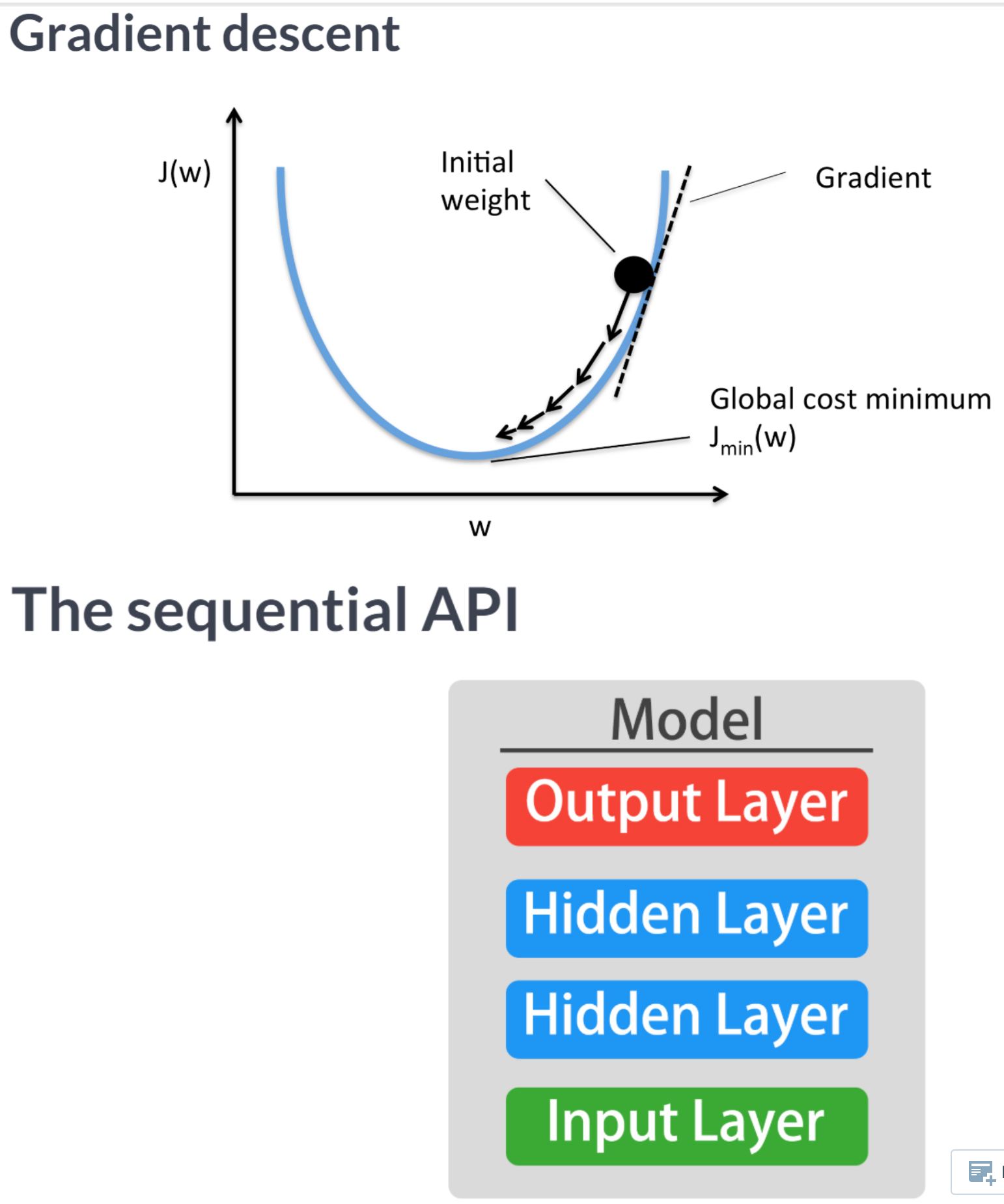

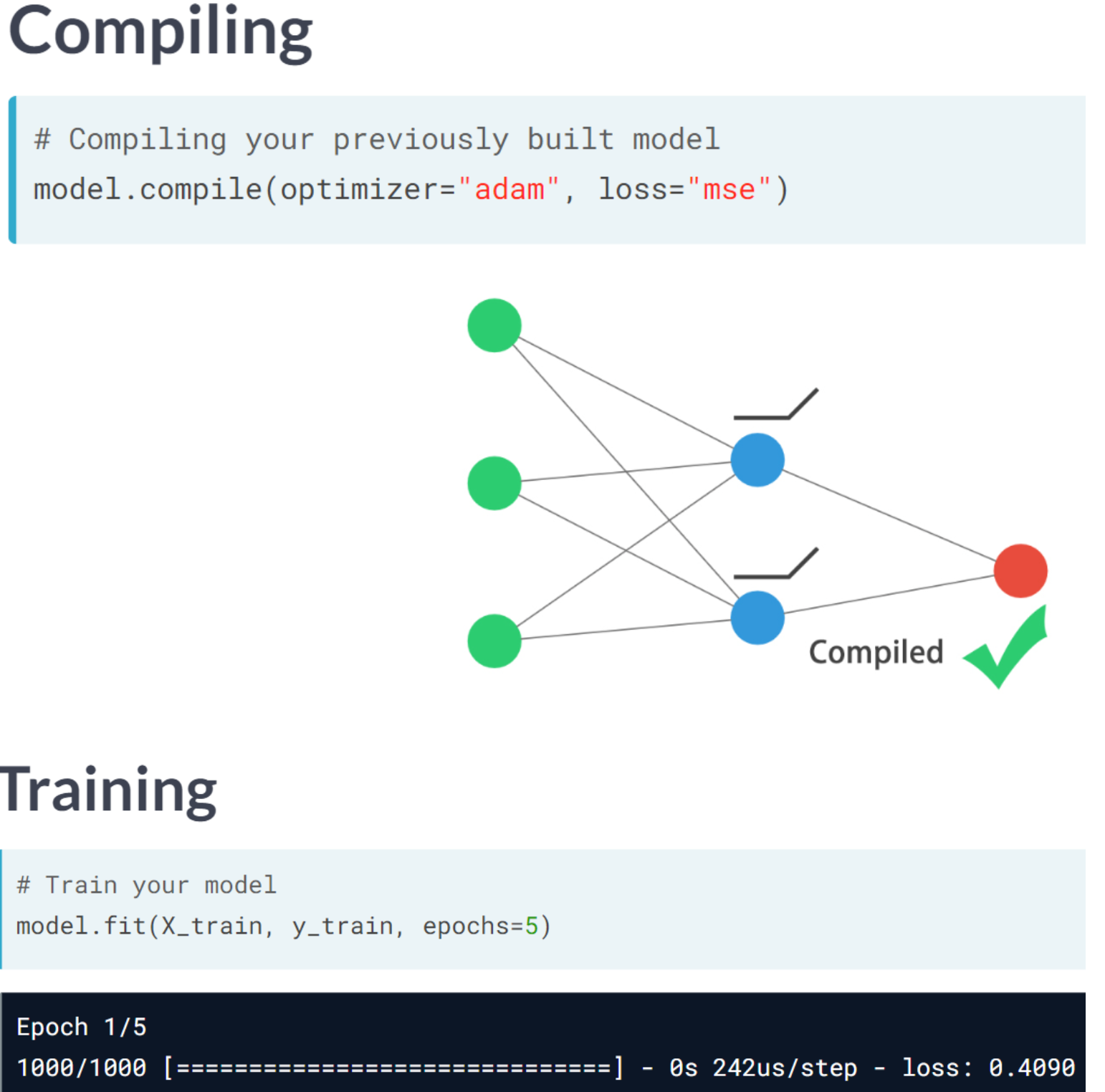

Remember that before training your Keras models you need to compile them. This can be done with the .compile() method, which takes arguments such as the optimizer, used for updating the weights, and the loss function, which is what we want to minimize. Training your model is as easy as calling the .fit() method, passing in the features, the labels and the number of epochs to train for. Train the model and evaluate it on this very same data to see whether it can learn the meteor’s trajectory.
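For reference, the 'mse' loss used when compiling is the mean squared error: the average of the squared differences between predictions and labels. Here is a minimal NumPy sketch of that quantity; the numbers are made up for illustration and `mean_squared_error` is a hand-written helper, not the Keras implementation.

```python
import numpy as np


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between labels and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


# Illustrative values only: three labels and three predictions.
labels = [100.0, 81.0, 64.0]
predictions = [98.0, 82.0, 64.0]

mse = mean_squared_error(labels, predictions)
print(mse)  # (4 + 1 + 0) / 3, about 1.667
```

Because the errors are squared, the loss is in squared units of y; taking its square root gives a typical error size in the same units as the positions.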

model.compile(optimizer='adam', loss='mse')

print('Training started..., this can take a while:')

# Fit your model on your data for 30 epochs

model.fit(time_steps, y_positions, epochs=30)

# Evaluate your model

print("Final loss value:", model.evaluate(time_steps, y_positions))

print("\nYou can check the console to see how the loss function decreased as epochs went by. Your model is now ready to make predictions on unseen data")

Training started..., this can take a while:

Epoch 1/30

63/63 [==============================] - 1s 5ms/step - loss: 1555.1772

Epoch 2/30

63/63 [==============================] - 0s 4ms/step - loss: 250.0806

Epoch 3/30

63/63 [==============================] - 0s 4ms/step - loss: 137.0985

Epoch 4/30

63/63 [==============================] - 0s 4ms/step - loss: 117.7206

Epoch 5/30

63/63 [==============================] - 0s 4ms/step - loss: 95.1392

Epoch 6/30

63/63 [==============================] - 0s 4ms/step - loss: 73.8897

Epoch 7/30

63/63 [==============================] - 0s 4ms/step - loss: 53.5082

Epoch 8/30

63/63 [==============================] - 0s 4ms/step - loss: 37.2037

Epoch 9/30

63/63 [==============================] - 0s 4ms/step - loss: 24.1432

Epoch 10/30

63/63 [==============================] - 0s 4ms/step - loss: 15.4662

Epoch 11/30

63/63 [==============================] - 0s 4ms/step - loss: 9.9149

Epoch 12/30

63/63 [==============================] - 0s 4ms/step - loss: 7.0810

Epoch 13/30

63/63 [==============================] - 0s 4ms/step - loss: 4.8009

Epoch 14/30

63/63 [==============================] - 0s 4ms/step - loss: 3.5325

Epoch 15/30

63/63 [==============================] - 0s 4ms/step - loss: 2.7641

Epoch 16/30

63/63 [==============================] - 0s 4ms/step - loss: 2.1755

Epoch 17/30

63/63 [==============================] - 0s 4ms/step - loss: 1.5552

Epoch 18/30

63/63 [==============================] - 0s 4ms/step - loss: 1.2003

Epoch 19/30

63/63 [==============================] - 0s 4ms/step - loss: 1.1083

Epoch 20/30

63/63 [==============================] - 0s 4ms/step - loss: 0.8658

Epoch 21/30

63/63 [==============================] - 0s 4ms/step - loss: 0.6793

Epoch 22/30

63/63 [==============================] - 0s 4ms/step - loss: 0.5371

Epoch 23/30

63/63 [==============================] - 0s 4ms/step - loss: 0.4561

Epoch 24/30

63/63 [==============================] - 0s 4ms/step - loss: 0.4162

Epoch 25/30

63/63 [==============================] - 0s 4ms/step - loss: 0.3474

Epoch 26/30

63/63 [==============================] - 0s 4ms/step - loss: 0.3001

Epoch 27/30

63/63 [==============================] - 0s 4ms/step - loss: 0.3633

Epoch 28/30

63/63 [==============================] - 0s 4ms/step - loss: 0.2325

Epoch 29/30

63/63 [==============================] - 0s 4ms/step - loss: 0.1830

Epoch 30/30

63/63 [==============================] - 0s 4ms/step - loss: 0.1686

63/63 [==============================] - 0s 3ms/step - loss: 0.1349

Final loss value: 0.13486835360527039

You can check the console to see how the loss function decreased as epochs went by. Your model is now ready to make predictions on unseen data

2023-04-06 07:31:30.483461: W tensorflow/tsl/platform/profile_utils/cpu_utils.cc:128] Failed to get CPU frequency: 0 Hz

You’ve already trained a model that approximates the orbit of the meteor approaching Earth and it’s loaded for you to use.

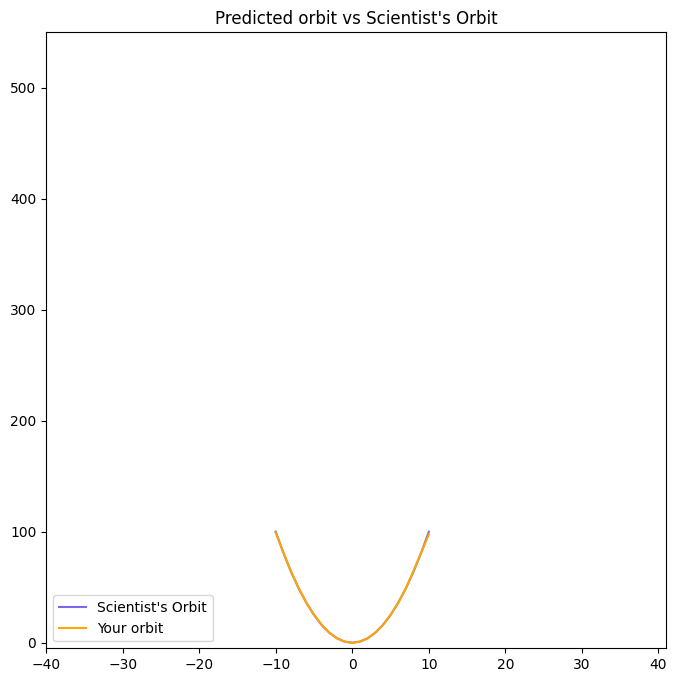

Since you trained your model for values between -10 and 10 minutes, your model hasn’t yet seen any other values for different time steps. You will now visualize how your model behaves on unseen data.

Hurry up, the Earth is running out of time!

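Remember that np.arange(x, y) produces values from x up to, but not including, y, that is, the half-open interval [x, y). With integer arguments and the default step of 1, the last value is y-1.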
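A quick check of that half-open behaviour, using the two ranges that match this exercise. The ranges are an assumption based on the training window of -10 to 10 and the -40 to 41 axis limits in the plotting function that follows.

```python
import numpy as np

# The upper bound is excluded, so 11 is needed to include 10.
training_range = np.arange(-10, 11)
print(training_range[0], training_range[-1], len(training_range))  # -10 10 21

# A wider window that reaches well beyond the training data.
unseen_range = np.arange(-40, 41)
print(unseen_range[0], unseen_range[-1], len(unseen_range))  # -40 40 81
```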

def plot_orbit(model_preds):
    # Half-width of the time axis, derived from the number of predictions
    axeslim = int(len(model_preds) / 2)
    # Reference trajectory: y = t ** 2
    plt.plot(np.arange(-axeslim, axeslim + 1), np.arange(-axeslim, axeslim + 1) ** 2,
             color="mediumslateblue")
    # Model predictions over the same time steps
    plt.plot(np.arange(-axeslim, axeslim + 1), model_preds, color="orange")
    plt.axis([-40, 41, -5, 550])
    plt.legend(["Scientist's Orbit", 'Your orbit'], loc="lower left")
    plt.title("Predicted orbit vs Scientist's Orbit")

1/1 [==============================] - 0s 58ms/step

3/3 [==============================] - 0s 11ms/step

print("\nYour model fits perfectly to the scientists' trajectory for time values between -10 and +10, the region where the meteor crosses the impact region, so we won't be hit! However, it starts to diverge when predicting for new values we haven't trained for. This shows neural networks learn according to the data they are fed with. Data quality and diversity are very important.")

Your model fits perfectly to the scientists' trajectory for time values between -10 and +10, the region where the meteor crosses the impact region, so we won't be hit! However, it starts to diverge when predicting for new values we haven't trained for. This shows neural networks learn according to the data they are fed with. Data quality and diversity are very important.
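One way to see why the predictions diverge: a network built from ReLU units computes a piecewise-linear function, so beyond the last bend it can only continue along a straight line, while the true orbit keeps curving. The sketch below is not the trained Keras model. It is a hand-built function (a linear term plus one ReLU unit per interior knot, with integer knots chosen for illustration) that matches t² exactly at every knot inside the training range and is then evaluated outside it.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


# Knots inside the training range and the target values t**2 at those knots.
knots = np.arange(-10, 11).astype(float)    # -10, -9, ..., 10
targets = knots ** 2
slopes = np.diff(targets) / np.diff(knots)  # slope of each linear segment


def relu_fit(t):
    """Piecewise-linear function that interpolates t**2 at the knots."""
    t = np.asarray(t, dtype=float)
    # Start on the first segment's line.
    out = targets[0] + slopes[0] * (t - knots[0])
    # Each unit relu(t - knot) bends the line by the change in slope there.
    for knot, bend in zip(knots[1:-1], np.diff(slopes)):
        out = out + bend * relu(t - knot)
    return out


inside = relu_fit(knots)                # matches t**2 at every knot
outside = relu_fit([-40.0, 40.0])       # continues along straight lines
print(np.allclose(inside, targets))     # True
print(outside, np.array([-40.0, 40.0]) ** 2)  # 670 at both ends versus 1600
```

Past the last knot there is nothing left to bend the line, so the gap to t² widens the further you move from the training range, which mirrors the behaviour described above.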